The Road to Exascale: Can Nanophotonics Help?

Every human endeavor needs its own equivalent of the Holy Grail – a tantalizing, desirable and often somewhat mystical objective that beckons enticingly from just beyond the far horizon.

In the world of HPC, we have exascale. Realizing computing capabilities in the next five to ten years that represent a thousandfold increase over today's petaflop systems is not an impossible dream; it's just very difficult to achieve, especially if you extrapolate from today's technology.

This is why last summer DARPA (Defense Advanced Research Projects Agency) issued a Broad Agency Announcement (the Agency's equivalent of an RFP) to develop exascale prototype systems based on revolutionary new design concepts. The BAA stated flatly, "To meet the relentlessly increasing demands for greater performance and higher energy efficiency, revolutionary new computer systems designs will be essential…UHPC systems designs that merely pursue evolutionary development will not be considered."

The four organizations selected to participate in what DARPA calls its Ubiquitous High Performance Computing (UHPC) program are Intel, NVIDIA, the Massachusetts Institute of Technology Computer Science and Artificial Intelligence Laboratory, and Sandia National Laboratories. An Applications, Benchmarks and Metrics team to evaluate the various UHPC systems under development is being led by the Georgia Institute of Technology.

The 800-pound gorilla in the room is power. If you concentrate on ramping up current systems to break the exaflop barrier, the conventional wisdom states that you will have to build a nuclear reactor next to your datacenter just to handle the exascale system's power requirements.

DARPA wants its contractors to be at the forefront of a revolution in computing, one that will lead to the development of radical new computer architectures and programming models. Not only should these new systems have 1,000 times the performance of today's systems and be easier to use, they should be 1,000 times more energy efficient.

A conventional HPC system based on CPUs consumes about 5 nJ/flop, or about 5 MW for a one-petaflop system. Even with improvements in energy efficiency over the next five to ten years, an exascale system based on extrapolations of current technology will require somewhere in the neighborhood of 1.25 GW of power, far more than the 50 MW regarded as a practical upper limit for such a system.
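The arithmetic behind these figures is simple enough to check by hand. The short Python sketch below is only a back-of-the-envelope illustration: the function and variable names are hypothetical, and the only inputs are the numbers quoted above (5 nJ/flop, 1.25 GW and 50 MW). It assumes power is simply energy per operation multiplied by operations per second, ignoring cooling, memory and interconnect overheads.

```python
# Back-of-the-envelope power arithmetic using the figures quoted in the article.
# All names are illustrative; only the numeric inputs come from the text.

PETAFLOPS = 1e15  # floating-point operations per second
EXAFLOPS = 1e18


def system_power_watts(energy_per_flop_j: float, flops_per_second: float) -> float:
    """Power = energy per operation x operations per second."""
    return energy_per_flop_j * flops_per_second


def energy_per_flop_joules(power_watts: float, flops_per_second: float) -> float:
    """Energy per operation implied by a power draw at a given speed."""
    return power_watts / flops_per_second


# 5 nJ/flop at one petaflop: about 5 MW
petascale_power = system_power_watts(5e-9, PETAFLOPS)

# 1.25 GW at one exaflop implies about 1.25 nJ/flop
projected_energy = energy_per_flop_joules(1.25e9, EXAFLOPS)

# A 50 MW ceiling at one exaflop allows only about 50 pJ/flop
budget_energy = energy_per_flop_joules(50e6, EXAFLOPS)

# How far the extrapolated system overshoots the 50 MW ceiling
power_gap = 1.25e9 / 50e6

if __name__ == "__main__":
    print(f"Petascale system today:  {petascale_power / 1e6:.2f} MW")
    print(f"Extrapolated exascale:   {projected_energy * 1e9:.2f} nJ/flop")
    print(f"50 MW exascale budget:   {budget_energy * 1e12:.1f} pJ/flop")
    print(f"Power gap to close:      {power_gap:.0f}x")
```

Read this way, the extrapolated exascale system overshoots the 50 MW ceiling by a factor of roughly 25, and fitting under that ceiling would mean cutting today's 5 nJ/flop by about a factor of 100.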

Naturally, NVIDIA views GPUs as "one of the most promising paths to exascale computing," according to Bill Dally, the company's chief scientist and vice president of research. But even with GPUs in the mix, a large power gap still looms as one of the major technology hurdles to be overcome.

Let There Be Light

Could a solution be arriving from an unexpected source? Just last week, Stanford University announced it had developed a new lightning-fast, efficient nanoscale data transmission device that uses thousands of times less energy than current technologies.

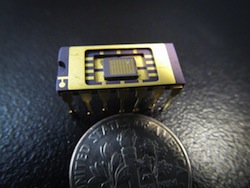

According to the release, "A team at Stanford's School of Engineering has demonstrated an ultrafast nanoscale light-emitting diode (LED) that is orders of magnitude lower in power consumption than today's laser-based systems and is able to transmit data at the very rapid rate of 10 billion bits per second.

"The researchers say it is a major step forward in providing a practical ultrafast, low-power light source for on-chip data transmission." Their findings have been published in the most recent edition of the journal Nature Communications.

Earlier this year the Stanford researchers had come up with a nanoscale laser that was also very efficient and fast, but it operated only at temperatures below 150 kelvin, a trifle cool for your standard datacenter. The new LED, however, can operate at room temperature, making it a prime candidate for next-generation computer chips.

The new device is a miracle of engineering that employs the scientific discipline known as nanophotonics. At the heart of the device, the Stanford team has inserted "quantum dots," tiny flecks of indium arsenide that emit light when pulsed with electricity. A photonic crystal surrounding the dots acts as a mirror that bounces the light into the center of the LED and forces it to resonate at a single frequency.

Of interest to exascale designers is the fact that tests of the device show it can transmit information 10 times faster than conventional devices while consuming 1,000 times less energy.

According to the Stanford press release, "In tech-speak, the new LED device transmits data, on average, at 0.25 femto-joules per bit of data." By comparison, today's typical "low" power laser device requires about 500 femto-joules to transmit the same bit.

"Our device is some 2,000 times more energy efficient than best devices in use today," said Jelena Vuckovic, an associate professor of electrical engineering at Stanford and the lead on the project.
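The 2,000-fold figure follows directly from the two energy-per-bit numbers above, and the same numbers give a rough feel for the power a single optical link would draw. The Python sketch below is illustrative only: the names are hypothetical, and it assumes the quoted average energy per bit holds at the full 10 billion bits per second. Running the conventional laser at that same rate is an assumption made purely for comparison, not a figure from the release.

```python
# Energy-per-bit comparison using the figures quoted from the Stanford release.
# Names are illustrative; applying both devices at 10 Gb/s is an assumption.

FEMTOJOULE = 1e-15  # joules

LED_ENERGY_PER_BIT_J = 0.25 * FEMTOJOULE    # new nanoscale LED (average)
LASER_ENERGY_PER_BIT_J = 500 * FEMTOJOULE   # typical "low" power laser
DATA_RATE_BPS = 10e9                        # 10 billion bits per second


def efficiency_gain(baseline_j_per_bit: float, new_j_per_bit: float) -> float:
    """How many times less energy the new device spends on each bit."""
    return baseline_j_per_bit / new_j_per_bit


def link_power_watts(j_per_bit: float, bits_per_second: float) -> float:
    """Power = energy per bit x bits per second."""
    return j_per_bit * bits_per_second


gain = efficiency_gain(LASER_ENERGY_PER_BIT_J, LED_ENERGY_PER_BIT_J)
led_power_w = link_power_watts(LED_ENERGY_PER_BIT_J, DATA_RATE_BPS)
laser_power_w = link_power_watts(LASER_ENERGY_PER_BIT_J, DATA_RATE_BPS)

if __name__ == "__main__":
    print(f"Efficiency gain:        {gain:.0f}x")
    print(f"LED link at 10 Gb/s:    {led_power_w * 1e6:.2f} microwatts")
    print(f"Laser link at 10 Gb/s:  {laser_power_w * 1e3:.2f} milliwatts")
```

Under those assumptions, each LED link would draw a few microwatts where a laser link draws a few milliwatts, a difference that matters once a chip carries thousands of such links.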

Exascale and Manufacturing

Suppose the new device does help speed the quest for the Grail: the development of exascale systems that are not only superfast, but require very little in the way of power? That would certainly be a boon for the large labs investigating such esoteric subjects as core-collapse supernovas, the makeup of dark matter, or the design of advanced fusion systems. But what about manufacturers, companies like Boeing, John Deere, Caterpillar and others that are already making good use of supercomputers and advanced digital manufacturing software?

We put that question to Merle Giles, the Director of Business and Economic Development at the National Center for Supercomputing Applications (NCSA). Many of those large manufacturers are his customers. Here's what Giles had to say:

"As is typical, advances like these have a trickle-down effect and, when embraced by the user community, turn into competitive advantage. Advantages tend to accrue first to the large companies that have in-house expertise to adopt new technologies quickly, or to companies of any size that stay close to organizations that make a business out of quickly understanding new technologies.

"In the manufacturing space, I can foresee that low-power data transmission could accelerate data-intensive applications, thus alleviating a common bottleneck. Some of NCSA's Fortune 50 partners, for instance, claim that HPC is no longer the primary bottleneck to productivity. Rather, the set-up, collection, and transmission of data has a lead-time that far exceeds computation itself. Low-power exascale system components, therefore, may reduce bottlenecks by lowering the costs of bringing large-scale computation closer to the source of the data.

"An example would be MRI machines. One of the reasons it takes two weeks for MRI analysis from a hospital is that the advanced data analysis is not done locally. Computational physics is central to MRI analysis, which is exceedingly difficult, and visual rendering of the physics requires serious compute time. More computation and data analysis capability at, or near, the MRI device would speed up patient diagnosis. Shorter diagnosis has clear health implications, but it also benefits the manufacturer because increased turnover of patients lowers overall costs. Lower costs create incentives to build more units.

"So while exascale computing may become a direct computational resource to be used by the world's largest companies, it should be expected to have valuable derivative benefits to the entire manufacturing sector."

The Quest Continues

Nanophotonics is just one of the breakthrough technologies that are part of the never-ending quest to build faster, more powerful supercomputers. However, we are not nearing the end of the story. We may develop exascale computers in the not-too-distant future, but there will always be a new Holy Grail just over the horizon, and no shortage of avid researchers to continue the quest.